Project Description

Focal surface displays address a key perceptual limitation of conventional head-mounted displays (HMDs): the inability to display scene content at correct focal depths. Conventional HMDs are limited to fixed-focus accommodation cues determined by the eyepiece focal length. Any scene content with a virtual distance from the viewer that differs from the fixed focal distance of the HMD screen will have binocular disparity cues (vergence) in conflict with focus cues (accommodation). This vergence-accommodation conflict (VAC) prevents scene content from appearing sharply in focus because the human visual system tries to match eye focus distance to vergence distance. This effect may also contribute to viewer discomfort.
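The size of this conflict is conveniently expressed in diopters (reciprocal meters), the unit used throughout the figures below. The following is a minimal sketch, not taken from the paper: the function names and the example distances (an object rendered at 0.25 m on a display focused at 2 m) are illustrative assumptions.

```python
import math


def to_diopters(distance_m: float) -> float:
    """Convert a viewing distance in meters to diopters (1/m); infinity maps to 0 D."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def vac_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Absolute mismatch between the vergence and accommodation distances, in diopters."""
    return abs(to_diopters(vergence_distance_m) - to_diopters(focal_distance_m))


# Illustrative values only (assumptions, not measurements from the paper).
conflict = vac_diopters(0.25, 2.0)
print(f"{conflict:.2f} D")  # 4.0 D - 0.5 D = 3.50 D
```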

Previously proposed solutions include displaying multiple focal planes, using integral imaging techniques to synthesize light fields from scene content, or creating digital holograms. All of these approaches suffer from some combination of low-fidelity accommodation cues, low resolution and field of view, and practical infeasibility. Focal surface displays, on the other hand, are intended to produce high-fidelity accommodation cues for natural scenes using off-the-shelf optical components. Using a phase-only spatial light modulator (SLM), placed between the display screen and eyepiece of an otherwise conventional HMD, the focal surface display produces continuously variable focus across the display field of view. This dynamic, freeform lens is optimized to produce physically realizable focus cues matching the virtual scene depth map. Aberrations and other distortions introduced by the SLM are corrected in a secondary blending optimization based on a raytraced forward model of the system.

Video Introduction

Publications

"Focal Surface Displays"

N. Matsuda, A. Fix, D. Lanman

ACM Trans. Graph. 36, 4, Article 86 (July 2017)

[PDF]

News

- Oculus Research’s focal surface display could make VR much more comfortable for our eyeballs

- VR has a hard time showing you things up close, but Oculus might have a fix

- Bringing Virtual Reality Into Focus

- Oculus Research to Present Focal Surface Display Discovery at SIGGRAPH

Images

Focal Surface Displays

A focal surface display augments a conventional head-mounted display (HMD) with a spatially programmable focusing element placed between the eyepiece and the underlying color display. This design depicts sharp imagery and near-correct retinal blur, across an extended depth of focus, by shaping one or more virtual images to conform to the scene geometry. Our rendering algorithm uses an input focal stack and depth map to produce a set of phase functions (displayed on a phase SLM) and RGB images (displayed on an OLED). Shown from left to right are: a prototype using a liquid crystal on silicon (LCOS) phase modulator, a target scene, the optimized focal surfaces and color images displayed across three frames, and the resulting focal stack, sampled at a near and far focus of 4.0 and 0.0 diopters, respectively.

Accommodation-Capable Display Continuum

Focal surface displays generalize the concept of manipulating the optical focus of each pixel on an HMD. Configurations (d,e) augment a fixed-focus HMD (a) with a programmable phase modulator placed between the eyepiece and display. (b) Varifocal HMDs use a globally addressed tunable lens. (c) Multifocal displays may use a high-speed tunable lens and display to create multiple focal planes. (f) In contrast, certain light field HMD concepts fall at the other end of this spectrum, using a finely structured phase modulator (a microlens array) placed near the display. (d) In this paper, we consider designs existing between these extremes in which a phase modulator locally adjusts the focus to follow the virtual geometry, generalizing varifocal and multifocal concepts. (e) Similar to multifocal displays, multiple focal surfaces can be synthesized with high-speed phase modulators and displays.

Accommodation-Capable Display Assessment

Accommodation-supporting displays are assessed relative to optical and perceptual criteria. †Resolution is listed according to theoretical upper bounds (e.g., diffraction limits). ‡Field of view, eye box dimensions, and image quality depend on implementation choices: listed values correspond to the performance of prototypes in the cited publications, being indicative of current display technology limitations. Note that “moderate” resolution, field of view (FOV), eye box width, and depth of focus (DOF) are defined, respectively, as: 10–20 cycles per degree (cpd), 40–80 degrees, 5–10 mm, and 1–3 diopters. Excursions above or below these ranges are shaded green or red, respectively. Form factors are not compared, as most concepts are currently embodied by early stage prototypes not optimized for size or weight.
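The "moderate" ranges above can be written down as a small lookup, sketched here. The dictionary keys and the function name are hypothetical, and treating the range endpoints as "moderate" is an assumption, since the caption does not say how boundary values are shaded.

```python
# "Moderate" ranges quoted in the caption: (lower bound, upper bound).
MODERATE_RANGES = {
    "resolution_cpd": (10.0, 20.0),  # cycles per degree
    "fov_deg": (40.0, 80.0),         # field of view, degrees
    "eye_box_mm": (5.0, 10.0),       # eye box width, millimeters
    "dof_diopters": (1.0, 3.0),      # depth of focus, diopters
}


def shade(criterion: str, value: float) -> str:
    """Return 'green' above the moderate range, 'red' below it, else 'moderate'."""
    low, high = MODERATE_RANGES[criterion]
    if value > high:
        return "green"
    if value < low:
        return "red"
    return "moderate"  # endpoints count as moderate (assumption)


print(shade("fov_deg", 30.0))       # red
print(shade("dof_diopters", 4.0))   # green
```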

Optical Diagram

A focal surface display is created by placing a phase-modulation element between an eyepiece and a display screen. This phase element and the eyepiece work in concert as a spatially programmable compound lens, varying the apparent virtual image distance across the viewer’s field of view.

Toy Scene

A focal surface decomposition is presented above for a simple scene, containing: a background fronto-parallel plane at 1.0 diopters, a foreground fronto-parallel plane at 4.0 diopters, and a slanted plane spanning 2.0 to 4.0 diopters. (a) A single image from the target focal stack. (b) The target depth map. (c) A two-surface decomposition is compared to the target depth map for a profile taken along the middle row of the target imagery. (d,e) The color images associated with each focal surface are shown, using the linear blending method of Akeley et al. [2004]. Note that this decomposition produces clearly delineated “foreground” and “background” components. (f,g) The color images associated with each focal surface, using the optimized blending algorithm presented in Section 3.3. Note that, similar to Narain et al. [2015], the resulting color images present high spatial frequencies closer to the focal surface near where they occur in the target scene. We emphasize that, as with other multifocal displays reviewed in Section 2, time-multiplexed focal surface display reduces brightness, due to color image components being presented for a briefer duration than that occurring with a fixed-focus display mode.
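Linear blending, as used for panels (d,e), can be sketched per pixel as follows. This is a simplified illustration, assuming the pixel's intensity is split between the two neighboring surfaces in proportion to dioptric distance; the function name and the clamping behavior are assumptions, and the optimized blending of Section 3.3 is not reproduced here.

```python
def linear_blend_weights(depth_d: float, near_d: float, far_d: float):
    """Split a pixel between two surfaces by dioptric distance.

    depth_d: target depth of the pixel, in diopters.
    near_d, far_d: depths of the two surfaces at that pixel, with near_d > far_d.
    Returns (w_near, w_far), which sum to 1.
    """
    if near_d <= far_d:
        raise ValueError("near_d must be larger (closer) than far_d")
    t = (depth_d - far_d) / (near_d - far_d)
    t = min(1.0, max(0.0, t))  # clamp depths lying outside the two surfaces
    return t, 1.0 - t


# A pixel at 2.0 D between surfaces at 4.0 D and 1.0 D.
w_near, w_far = linear_blend_weights(2.0, 4.0, 1.0)
print(round(w_near, 2), round(w_far, 2))  # 0.33 0.67
```

A pixel lying exactly on one surface is assigned entirely to that surface, which is consistent with the clearly delineated foreground and background components noted in the caption.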

Depth Reconstruction Assessment

Focal surface displays achieve lower depth map approximation errors, using less time multiplexing, than prior multifocal methods. As a result, such displays can support higher resolution image content (see Section 3.2). The upper row visualizes the optimized focal surfaces ranging from 0.0 to 5.0 diopters, abbreviated “D”. The lower row depicts the resulting depth map approximation errors in diopters. For a fixed focus design, the virtual image is positioned at 0.5 D. Following Narain et al. [2015], the fixed multifocal display employs four planes evenly spaced from 0.2 D to 2.0 D. The adaptive multifocal display and the focal surface display are optimized using k-means clustering, following Wu et al. [2016], and the methods in Sections 3.1 and 3.2 to position planes across a 5.0 D span, respectively. Focal surface displays show significantly fewer depth errors, with errors decreasing as more surfaces are used.
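The adaptive multifocal baseline can be illustrated with a one-dimensional k-means over per-pixel depths in diopters. This is a rough sketch under stated assumptions: the evenly spaced initialization, the function names, and the sample depths (loosely modeled on the toy scene) are not from the paper, and the focal surface optimization of Sections 3.1 and 3.2 is not shown.

```python
def kmeans_planes(depths, k, iters=50):
    """Place k flat focal planes (in diopters) by 1D k-means over pixel depths."""
    lo, hi = min(depths), max(depths)
    # Deterministic start: centers spread evenly across the depth range.
    centers = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for d in depths:
            nearest = min(range(k), key=lambda i: abs(d - centers[i]))
            clusters[nearest].append(d)
        # Empty clusters keep their previous center.
        updated = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
        if updated == centers:
            break
        centers = updated
    return sorted(centers)


def mean_abs_error(depths, planes):
    """Mean distance in diopters from each pixel depth to its nearest plane."""
    return sum(min(abs(d - p) for p in planes) for d in depths) / len(depths)


# Made-up sample depths in diopters: background, foreground, and a slanted plane.
depths = [1.0] * 4 + [4.0] * 4 + [2.0, 2.5, 3.0, 3.5]
for k in (1, 2):
    planes = kmeans_planes(depths, k)
    print(k, [round(p, 2) for p in planes], round(mean_abs_error(depths, planes), 3))
```

Because each plane is flat, the slanted region always leaves a residual error; a focal surface can bend to follow it, which is the difference the figure highlights.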

Middlebury Dataset Evaluation

Focal surface displays represent natural scene depths with few image components. Box plots compare the depth map errors using the denoted methods with the Middlebury 2014 dataset [Scharstein et al. 2014]. The bottom and top of the whiskers indicate the 5th and 95th percentiles, respectively. The bottom, middle, and top of the boxes represent the 1st quartile, the median, and the 3rd quartile, respectively. Focal surface displays produce fewer depth errors, especially when fewer planes are used.
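The five summary values drawn in each box plot can be computed as sketched below. The linear interpolation between neighboring samples is an assumption (the caption does not state which percentile definition was used), and the function names are illustrative.

```python
def percentile(sorted_vals, p):
    """Linearly interpolated percentile (p in [0, 100]) of pre-sorted values."""
    if not sorted_vals:
        raise ValueError("need at least one value")
    pos = (len(sorted_vals) - 1) * p / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1.0 - frac) + sorted_vals[hi] * frac


def box_stats(errors):
    """Whisker and box positions as described in the caption."""
    s = sorted(errors)
    return {
        "whisker_low": percentile(s, 5),    # 5th percentile
        "q1": percentile(s, 25),            # 1st quartile
        "median": percentile(s, 50),
        "q3": percentile(s, 75),            # 3rd quartile
        "whisker_high": percentile(s, 95),  # 95th percentile
    }


# Made-up per-pixel depth errors in diopters, for illustration only.
print(box_stats([0.0, 0.1, 0.1, 0.2, 0.4, 0.9]))
```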

Custom Dataset Evaluation

We repeat the assessment with the database of rendered scenes described in Section 3.2. Note that the trends are repeated, but, due to the larger depth ranges in this database, additional virtual image surfaces are required with prior fixed and adaptive multifocal displays.

Perceptual Assessment

Focal surface displays depict near-correct retinal blur with fewer virtual image surfaces than prior multifocal architectures. Following Figures 5–7, focal surface displays produce virtual images that more closely align with the scene geometry. As a result, sharply focused imagery can be obtained throughout the scene, reducing focusing errors occurring with prior fixed and adaptive multifocal displays. In this figure, we quantitatively assess the focal stack reproduction error following the method of Narain et al. [2015]: the lower row depicts the maximum per-pixel probability of detecting a difference between the target and reconstructed focal stacks, as quantified using the HDR-VDP-2 metric [Mantiuk et al. 2011b]. The corresponding quality predictor of the mean opinion score (MOS) is listed along the bottom. Note that focal surface displays achieve fidelity similar to that of prior adaptive multifocal displays, although with fewer virtual image surfaces.

Design Tradespace

The accommodation range of a focal surface display depends critically on the SLM placement. Here we denote, via the labeled plot contours, the virtual image distance zv achieved with an SLM, when used to represent a lens of focal length fp and positioned a distance zp from the eyepiece. Red lines indicate focal lengths beyond the dynamic range of the SLM. Note that these numbers correspond with the prototype described in Section 5.1.
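The dependence of zv on fp and zp can be explored with a paraxial two-thin-lens model, sketched below. This is an idealized approximation, not the paper's model: it treats the SLM and eyepiece as thin lenses, measures the virtual image from the eyepiece (ignoring eye relief), and introduces the display distance `zd_m` and eyepiece focal length `fe_m` as assumed parameters with made-up example values.

```python
import math


def virtual_image_diopters(fp_m, zp_m, zd_m, fe_m):
    """Virtual image distance, in diopters from the eyepiece, for a thin-lens SLM.

    fp_m: SLM lens focal length (math.inf means the SLM is flat).
    zp_m: SLM distance from the eyepiece.
    zd_m: display distance from the eyepiece (assumed parameter).
    fe_m: eyepiece focal length (assumed parameter).
    Positive results are virtual images at a finite distance in front of the
    viewer; 0 D is optical infinity.
    """
    slm_to_display = zd_m - zp_m
    if slm_to_display <= 0.0:
        raise ValueError("the SLM must sit between the eyepiece and the display")
    slm_power = 0.0 if math.isinf(fp_m) else 1.0 / fp_m
    # Thin-lens equation at the SLM: 1/s1' = 1/fp - 1/s1.
    inv_s1_image = slm_power - 1.0 / slm_to_display
    if inv_s1_image == 0.0:
        inv_s2 = 0.0  # intermediate image at infinity
    else:
        # Object distance seen by the eyepiece.
        s2 = zp_m - 1.0 / inv_s1_image
        if s2 == 0.0:
            raise ValueError("intermediate image falls on the eyepiece")
        inv_s2 = 1.0 / s2
    # Thin-lens equation at the eyepiece, with zv = -s2'.
    return inv_s2 - 1.0 / fe_m


# Illustrative geometry only: 50 mm eyepiece, display at its focal plane,
# SLM 30 mm from the eyepiece.
for fp in (math.inf, -0.5, -0.25):
    print(fp, round(virtual_image_diopters(fp, 0.03, 0.05, 0.05), 3))
```

In this simplified model, moving the SLM closer to the display shrinks the change in zv produced by a given fp, which is one way to read the caption's point that accommodation range depends critically on SLM placement.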

Hardware Prototype

Our binocular focal surface display prototype incorporates commodity optical and mechanical components, as well as 3D-printed support brackets. (a) The prototype is mounted to an optical breadboard to support the comparatively large LCOS driver electronics. A chin rest is used to position the viewer within the eye box. (b) A cutaway of the prototype exposes the arrangement of the optical components.

Experimental Results

Our prototype focal surface display achieves high resolution with near-correct retinal blur. Photographs of the prototype are shown in the first three columns, as taken by focusing the camera at the indicated distances. The last two columns depict the corresponding optimization outputs, including the phase functions and the color images. Note that optimized blending is applied with three time-multiplexed focal surfaces. The phase functions are wrapped assuming a wavelength of 532 nm. Note that the results for the lower scene employ linear blending, following Akeley et al. [2004]. See the supplementary video for the full focal stack results.
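Phase wrapping can be illustrated with the paraxial phase of a single thin lens, reduced modulo 2π at the 532 nm design wavelength. This is only a sketch: the displayed phase functions are spatially varying optimization outputs rather than one global lens, and the pixel pitch and function names here are hypothetical.

```python
import math

WAVELENGTH_M = 532e-9   # design wavelength stated in the caption
PIXEL_PITCH_M = 8e-6    # hypothetical SLM pixel pitch (assumption)


def wrapped_lens_phase(x_m, y_m, focal_length_m, wavelength_m=WAVELENGTH_M):
    """Paraxial thin-lens phase at (x, y), wrapped modulo 2*pi."""
    phase = -math.pi * (x_m ** 2 + y_m ** 2) / (wavelength_m * focal_length_m)
    return phase % (2.0 * math.pi)


def phase_row(n_pixels, focal_length_m):
    """Wrapped phase along one SLM row centered on the optical axis."""
    half = (n_pixels - 1) / 2.0
    return [wrapped_lens_phase((i - half) * PIXEL_PITCH_M, 0.0, focal_length_m)
            for i in range(n_pixels)]


row = phase_row(5, 0.5)
print([round(p, 4) for p in row])
```

Shorter focal lengths make the wrapped phase reset more often across the aperture, which relates to the stray light from phase resets mentioned in the contrast loss caption below.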

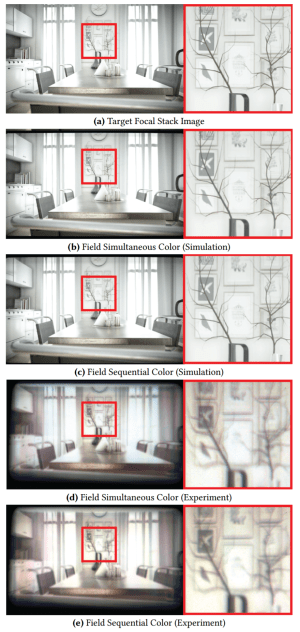

Field Sequential vs. Field Simultaneous Display

To minimize time multiplexing, focal surface displays should operate in a field simultaneous color mode. Following Section 6.1.2, artifacts due to axial chromatic aberration (ACA) may appear in this mode. (a) A target focal stack image. (b,c) Simulations comparing field simultaneous and field sequential modes, using the geometric optics model from Section 3. (d,e) Corresponding experimental results. Note that the contrast of experimental results differs from simulations due to stray light and misalignments that cannot be predicted without more accurate wave optics modeling and calibration, respectively.

Contrast Loss Characterization

The measured modulation transfer function (MTF) of our prototype confirms high resolution is achieved. Following Section 5.3, the MTF was measured as the system varies focus from 0.0 to 4.0 diopters. Contrast loss is expected as the SLM synthesizes shorter focal lengths, due to the increased stray light from phase quantization and phase resets (see Section 5.3).

Axial Chromatic Aberration Characterization

The measured axial chromatic aberration (ACA) of our prototype is less than that of the typical human eye [Fernandez et al. 2013], confirming that focal cues are correctly rendered with field simultaneous color presentation, in spite of polychromatic illumination. †The SLM optical power was optimized, following Equation 5, for λ = 532 nm. ‡ACA is reported as the apparent optical distance in diopters, measured relative to the green channel. Focal distances are measured using a varifocal camera and a depth-from-focus metric (i.e., maximizing contrast for a high-frequency pattern).
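The depth-from-focus measurement and the green-relative ACA values can be sketched as follows. The data layout, function names, and sample numbers are all made up for illustration; how contrast is computed from each camera image is left out, since the caption does not specify it.

```python
def best_focus(sweep):
    """Depth from focus: pick the camera focus setting with maximum contrast.

    sweep: list of (focus_diopters, contrast) pairs from a varifocal camera.
    """
    return max(sweep, key=lambda sample: sample[1])[0]


def aca_relative_to_green(sweeps):
    """Per-channel focal distance in diopters, relative to the green channel."""
    focus = {channel: best_focus(sweep) for channel, sweep in sweeps.items()}
    return {channel: f - focus["green"] for channel, f in focus.items()}


# Made-up contrast sweeps; not measurements from the prototype.
sweeps = {
    "red":   [(1.8, 0.30), (2.0, 0.42), (2.2, 0.55), (2.4, 0.41)],
    "green": [(1.8, 0.35), (2.0, 0.60), (2.2, 0.44), (2.4, 0.31)],
    "blue":  [(1.8, 0.58), (2.0, 0.47), (2.2, 0.33), (2.4, 0.25)],
}
print(aca_relative_to_green(sweeps))
```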